Rule-Based Replica Assignment for SolrCloud

When Solr needs to assign nodes to collections, it can either assign them automatically at random, or the user can specify a set of nodes on which the replicas should be created. With very large clusters, it is hard to specify exact node names, and even then it does not give you fine-grained control over how nodes are chosen for a shard. The user should have complete control over which nodes are chosen for each collection, shard, and replica. This helps to optimally allocate hardware resources across the cluster.

Rule-based replica assignment is a new feature coming to Solr 5.2 that allows the creation of rules to determine the placement of replicas in the cluster. In the future, this feature will help to automatically add/remove replicas when systems go down or when higher throughput is required. This enables a more hands-off approach to administration of the cluster.

This feature can be used in the following instances:

- Collection creation

- Shard creation

- Replica creation

Common use cases

- Don’t assign more than 1 replica of this collection to a host

- Assign all replicas to nodes with more than 100GB of free disk space, or assign replicas to the nodes with the most free disk space

- Do not assign any replica to a given host because I want to run an overseer there

- Assign only one replica of a shard per rack

- Assign replicas to nodes hosting fewer than 5 cores, or to the nodes hosting the fewest cores

What is a rule?

A rule is a set of conditions that a node must satisfy before a replica core can be created there. A rule consists of three parts:

- shard – the name of a shard or a wildcard (* means each shard). If shard is not specified, the rule applies to the entire collection

- replica – a number or a wildcard (* means any number, from zero to infinity)

- tag – an attribute of a node in the cluster that can be used in a rule, e.g. “freedisk”, “cores”, “rack”, “dc”. The tag name can be a custom string. If you create a custom tag, a Solr plugin called a snitch is responsible for providing its values.

Operators

A condition can use one of four operators:

- equals (no operator required): tag:x means the tag value must be equal to ‘x’

- greater than (>): tag:>x means the tag value must be greater than ‘x’. x must be a number

- less than (<): tag:<x means the tag value must be less than ‘x’. x must be a number

- not equal (!): tag:!x means the tag value MUST NOT be equal to ‘x’. The comparison is performed on the string value
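To make the operator semantics concrete, here is a minimal Java sketch that parses a single condition and evaluates it against one node’s tag value. The Condition class and its methods are hypothetical illustrations, not Solr’s internal classes; treating * as “match any value” and ignoring the fuzzy ~ suffix are simplifying assumptions.

```java
// Hypothetical sketch (not Solr code): one rule condition such as "cores:<5",
// "freedisk:>200" or "host:!192.45.67.3". The fuzzy "~" suffix is not handled.
final class Condition {
    final String tag;
    final char op;        // '=', '>', '<' or '!'
    final String operand; // the value after the operator

    Condition(String tag, char op, String operand) {
        this.tag = tag;
        this.op = op;
        this.operand = operand;
    }

    // Parses "tag:value", "tag:>value", "tag:<value" or "tag:!value".
    static Condition parse(String text) {
        int colon = text.indexOf(':');
        if (colon < 0) throw new IllegalArgumentException("missing ':' in " + text);
        String tag = text.substring(0, colon);
        String rest = text.substring(colon + 1);
        char first = rest.isEmpty() ? '=' : rest.charAt(0);
        if (first == '>' || first == '<' || first == '!') {
            return new Condition(tag, first, rest.substring(1));
        }
        return new Condition(tag, '=', rest);
    }

    // Returns true if the node's value for this tag satisfies the condition.
    boolean matches(String nodeValue) {
        if (nodeValue == null) return false;                     // no value: node cannot match
        if (op == '=' && "*".equals(operand)) return true;       // assumption: * matches any value
        switch (op) {
            case '>': return Double.parseDouble(nodeValue) > Double.parseDouble(operand); // numeric
            case '<': return Double.parseDouble(nodeValue) < Double.parseDouble(operand); // numeric
            case '!': return !operand.equals(nodeValue);         // string comparison
            default:  return operand.equals(nodeValue);          // string comparison
        }
    }
}
```

For example, Condition.parse("cores:<5").matches("3") is true, while Condition.parse("host:!192.45.67.3").matches("192.45.67.3") is false.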

Examples

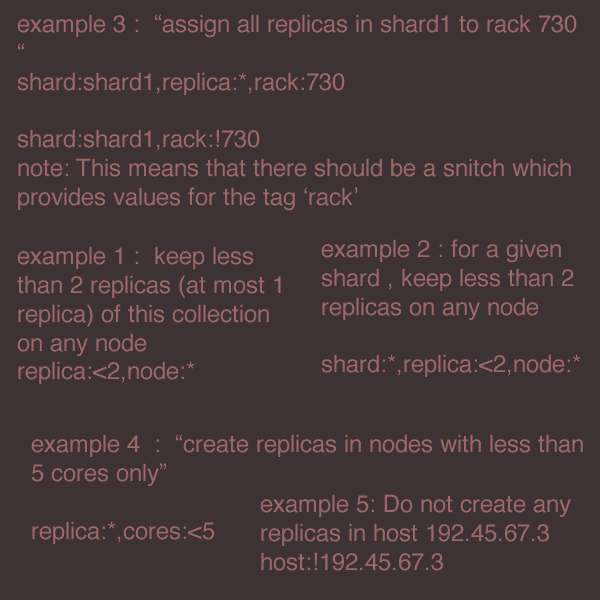

example 1: keep fewer than 2 replicas (at most 1 replica) of this collection on any node

replica:<2,node:*

example 2: for each shard, keep fewer than 2 replicas on any node

shard:*,replica:<2,node:*

example 3: “assign all replicas in shard1 to rack 730”

shard:shard1,replica:*,rack:730

The default value of replica is *, so it can be omitted and the rule can be reduced to

shard:shard1,rack:730

note: this requires a snitch that provides values for the tag ‘rack’

example 4: “create replicas only on nodes with fewer than 5 cores”

replica:*,cores:<5

or, simplified:

cores:<5

example 5: do not create any replicas on host 192.45.67.3

host:!192.45.67.3
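The examples above rely on two defaults: replica falls back to * when omitted, and a rule without shard applies to the whole collection. A minimal Java sketch of splitting a rule string into its conditions with those defaults follows; RuleParser is a hypothetical name, not a Solr API.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch (not Solr code): splits a rule such as
// "shard:shard1,rack:730" into tag -> condition-value pairs.
final class RuleParser {
    static Map<String, String> parse(String rule) {
        Map<String, String> conditions = new LinkedHashMap<>();
        for (String part : rule.split(",")) {
            int colon = part.indexOf(':');
            if (colon < 0) throw new IllegalArgumentException("bad condition: " + part);
            conditions.put(part.substring(0, colon).trim(), part.substring(colon + 1).trim());
        }
        // The replica condition defaults to "*" when it is omitted.
        conditions.putIfAbsent("replica", "*");
        return conditions;
    }

    // A rule without a "shard" condition applies to the entire collection.
    static boolean appliesToWholeCollection(Map<String, String> conditions) {
        return !conditions.containsKey("shard");
    }
}
```

Under this sketch, shard:shard1,rack:730 and shard:shard1,replica:*,rack:730 parse to the same conditions, which is why example 3 can be shortened.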

Fuzzy operator (~)

The fuzzy operator can be used as a suffix to any condition. Solr first tries to satisfy the rule strictly. If it can’t find enough nodes that match the criterion, it picks the next best match, which may not satisfy the criterion.

For example, freedisk:>200~ tries to assign replicas of this collection to nodes with more than 200GB of free disk space and, if that is not possible, chooses the node with the most free disk space.
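A minimal Java sketch of the fuzzy fallback for freedisk:>200~, assuming free disk values (in GB) are already known per node. FuzzyPick and its method are hypothetical, and the sketch covers only this one tag and operator rather than a general fuzzy-matching engine.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch (not Solr code) of "freedisk:>minFreeDisk~".
final class FuzzyPick {
    static List<String> candidates(Map<String, Double> freeDiskByNode, double minFreeDisk) {
        // Strict pass: nodes that actually satisfy the condition.
        List<String> strict = new ArrayList<>();
        for (Map.Entry<String, Double> e : freeDiskByNode.entrySet()) {
            if (e.getValue() > minFreeDisk) strict.add(e.getKey());
        }
        if (!strict.isEmpty()) return strict;

        // Fuzzy fallback: the best available node, even though it fails the condition.
        List<String> fallback = new ArrayList<>();
        freeDiskByNode.entrySet().stream()
            .max(Map.Entry.comparingByValue())
            .ifPresent(e -> fallback.add(e.getKey()));
        return fallback;
    }
}
```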

Choosing among equals

Before assignment, the nodes are sorted, using the rules themselves as the sort criteria. This ensures that even if many nodes match the rules, the best nodes are picked first. For example, if a rule says “freedisk:>20”, nodes are sorted by free disk space in descending order and the node with the most free disk space is picked first. If the rule is “cores:<5”, nodes are sorted by number of cores in ascending order and the node with the fewest cores is picked first.
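The sorting behaviour can be sketched in Java as a comparator chosen from the rule’s tag and operator. The Node and NodeSorter classes are hypothetical and only handle the freedisk and cores tags; they illustrate the ordering described above rather than Solr’s code.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical node description used only by this sketch.
final class Node {
    final String name;
    final double freeDisk; // free disk space in GB
    final int cores;       // number of cores hosted on the node

    Node(String name, double freeDisk, int cores) {
        this.name = name;
        this.freeDisk = freeDisk;
        this.cores = cores;
    }
}

final class NodeSorter {
    // Orders nodes so the best candidate for a rule on `tag` with operator `op` comes first.
    static List<Node> sortFor(String tag, char op, List<Node> nodes) {
        Comparator<Node> byValue;
        if ("freedisk".equals(tag)) {
            byValue = Comparator.comparingDouble((Node n) -> n.freeDisk);
        } else if ("cores".equals(tag)) {
            byValue = Comparator.comparingInt((Node n) -> n.cores);
        } else {
            throw new IllegalArgumentException("sketch only handles freedisk and cores: " + tag);
        }
        // '>' rules favour larger values (descending); '<' rules favour smaller ones (ascending).
        Comparator<Node> order = op == '>' ? byValue.reversed() : byValue;
        List<Node> sorted = new ArrayList<>(nodes);
        sorted.sort(order);
        return sorted;
    }
}
```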

Snitch

Tag values come from a plugin called a snitch. If a rule uses a tag called ‘rack’, there must be a snitch that provides the value of ‘rack’ for each node in the cluster. A snitch implements the Snitch interface. Solr provides a default snitch that supplies the following tags:

- cores: number of cores on the node

- freedisk: free disk space available on the node (in GB)

- host: host name of the node

- node: node name

- sysprop.{PROPERTY_NAME}: values available from system properties. sysprop.key refers to a value passed to the node as -Dkey=keyValue at startup. Rules such as sysprop.key:expectedVal,shard:* are possible
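As an illustration of the sysprop.{PROPERTY_NAME} convention, the Java sketch below resolves such tags from the JVM’s system properties on the local node. SyspropTags is a hypothetical helper, not the default snitch’s implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch (not Solr code): "sysprop.key" is resolved by reading the
// system property "key", i.e. the value passed at startup as -Dkey=keyValue.
final class SyspropTags {
    static final String PREFIX = "sysprop.";

    static Map<String, String> resolve(Set<String> requestedTags) {
        Map<String, String> values = new HashMap<>();
        for (String tag : requestedTags) {
            if (!tag.startsWith(PREFIX)) continue; // other tags come from other sources
            String value = System.getProperty(tag.substring(PREFIX.length()));
            if (value != null) values.put(tag, value); // property not set: no value for this node
        }
        return values;
    }
}
```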

How are Snitches configured?

It is possible to use one or more snitches for a set of rules. If the rules only use tags from the default snitch, no snitch needs to be configured explicitly.

example:

snitch=class:fqn.ClassName,key1:val1,key2:val2,key3:val3
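The snitch parameter is a comma-separated list of key:value pairs in which class names the implementation. A minimal Java sketch of splitting it, assuming values contain no commas of their own; SnitchSpec is a hypothetical name.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch (not Solr code): splits "class:fqn.ClassName,key1:val1,..."
// into the snitch class name and its remaining parameters.
final class SnitchSpec {
    final String className;
    final Map<String, String> params = new LinkedHashMap<>();

    SnitchSpec(String spec) {
        String cls = null;
        for (String pair : spec.split(",")) {
            int colon = pair.indexOf(':'); // split on the first ':' only
            if (colon < 0) throw new IllegalArgumentException("expected key:value, got " + pair);
            String key = pair.substring(0, colon);
            String value = pair.substring(colon + 1);
            if ("class".equals(key)) cls = value; else params.put(key, value);
        }
        if (cls == null) throw new IllegalArgumentException("missing class in " + spec);
        this.className = cls;
    }
}
```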

How does the system collect tag values?

- Identify the set of tags in the rules

- Create instances of the specified snitches. The default snitch is always created

- Ask each snitch whether it can provide values for any of the tags. If even one tag has no snitch, the assignment fails

- After identifying the snitches, ask them to provide the tag values for each node in the cluster

- If the value of a tag cannot be obtained for a given node, that node cannot participate in the assignment
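The steps above can be sketched in Java as follows. TagProvider is a hypothetical stand-in for a snitch (it is not Solr’s Snitch interface), and the caller is assumed to include the default snitch in the list, since it is always created.

```java
import java.util.*;

// Hypothetical stand-in for a snitch; not Solr's Snitch API.
interface TagProvider {
    boolean canProvide(String tag);
    // Returns the values this provider knows for the given node (may be partial).
    Map<String, String> values(String node, Set<String> tags);
}

final class TagCollector {
    // Returns tag values per node; nodes missing any required tag are left out.
    static Map<String, Map<String, String>> collect(Set<String> ruleTags,
                                                    List<TagProvider> snitches,
                                                    List<String> nodes) {
        // Every tag in the rules must be served by some snitch, otherwise fail.
        Map<TagProvider, Set<String>> assigned = new LinkedHashMap<>();
        for (String tag : ruleTags) {
            TagProvider owner = null;
            for (TagProvider s : snitches) {
                if (s.canProvide(tag)) { owner = s; break; }
            }
            if (owner == null) throw new IllegalStateException("no snitch provides tag: " + tag);
            assigned.computeIfAbsent(owner, k -> new HashSet<>()).add(tag);
        }
        // Ask the chosen snitches for each node's values; incomplete nodes are excluded.
        Map<String, Map<String, String>> result = new LinkedHashMap<>();
        for (String node : nodes) {
            Map<String, String> tagValues = new HashMap<>();
            for (Map.Entry<TagProvider, Set<String>> e : assigned.entrySet()) {
                tagValues.putAll(e.getKey().values(node, e.getValue()));
            }
            if (tagValues.keySet().containsAll(ruleTags)) result.put(node, tagValues);
        }
        return result;
    }
}
```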

How to configure rules?

Rules are specified per collection during collection creation as request parameters. It is possible to specify multiple ‘rule’ and ‘snitch’ params as in this example:

snitch=class:EC2Snitch&rule=shard:*,replica:1,dc:dc1&rule=shard:*,replica:<2,dc:dc3
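A Java sketch that builds the corresponding Collections API CREATE request, repeating the rule and snitch parameters and URL-encoding each value. The base URL, collection name and numShards value are placeholder assumptions; only the snitch and rule strings come from the example above. URLEncoder.encode(String, Charset) requires Java 10 or later.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.StringJoiner;

// Sketch: builds a CREATE request URL with one "snitch" and one "rule"
// parameter per entry. Base URL, name and numShards are placeholders.
final class CreateRequest {
    static String build(String baseUrl, String name, int numShards,
                        List<String> snitches, List<String> rules) {
        StringJoiner query = new StringJoiner("&");
        query.add("action=CREATE");
        query.add("name=" + enc(name));
        query.add("numShards=" + numShards);
        for (String s : snitches) query.add("snitch=" + enc(s)); // one param per snitch
        for (String r : rules) query.add("rule=" + enc(r));      // one param per rule
        return baseUrl + "/admin/collections?" + query;
    }

    // Percent-encodes reserved characters such as ':', ',' and '<'.
    private static String enc(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }
}
```

Each rule is sent as its own rule parameter rather than being joined into one value, matching the repeated-parameter form shown above.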

These rules are persisted in the cluster state in ZooKeeper and remain available throughout the lifetime of the collection. This enables the system to perform any future node allocation without direct user interaction.
