The Well Tempered Search Application – Fugue

or “Tarballs II – The Search for More Money”

(First a shout-out to the one and only Mel Brooks for inspiring the subtitle – and for Mel’s benefit – a “tarball” or .tar.gz file – the ‘t’ stands for “tape” believe it or not – is a thing that we computer guys use to ship stuff around our version of hyperspace, including downloads of Open Source software. Open Source is a killer idea Mel, a real modern-day “David and Goliath” story (no, not Gladwell’s awesome book) – I’ve got some script ideas if you’re interested. These tarball things are most at home on another Open Source thing, Linux – our favorite OS, not Windows – the OS made by those guys in Redmond which sometimes makes your computer act like it has real tarballs in it. But anyway, gotta go, huge fan, sir, keep ’em coming as long as you can. God bless and say Hi to Carl for me. Let’s do lunch sometime.)

This is the second in a two-part series on creating killer Search Apps, preferably with Apache Solr, which is without question the best search engine on the planet (at least in my mind, but since this is my Company’s web site after all and we are the Solr guys and gals, my opinion shouldn’t surprise you – but if you corner me after a few beers at Lucene-Solr Revolution sometime, I’ll bet you that I will say the same thing – even if none of my awesome coworkers are within earshot.) Anyway, if you read my previous post, you may remember that I had a few axes that needed grinding (or at least I thought so), and because of too much ranting, I had to leave without saying much that was new and exciting. I’ll do my best to redress that failure in this installment. There may be a bit more blood on the table before I’m through, but everybody should be able to leave the room under their own power. The shout-outs are to our Royal family (software magnates, that is), who were down in the pits once too, and like Charles, William, Harry and Liz, I don’t need to tell you their full names (can you call the Queen by a nickname? – probably not, but hey, I’m an American – but then again maybe Phil calls her Beth or Bess – have to research that one – I wonder if Google or Wikipedia knows?). If you don’t know who any of these other guys are, the rest of this blog will not make too much sense to you either.

Our Main Exposition – musically speaking

The idea that I am pursuing (beating to death?) in these posts is that the basic “bag-of-words” search paradigm is semantically challenged in a serious way, and that by injecting some semantic awareness into the process, we can get it to think more like we do (or give it that illusion), which makes the search “conversation” easier for us. And while we are not even coming close to passing the Turing test here, there are some tricks that we can pull to fake it at least well enough to make our users happier, which at the end of the day is all that matters (notice that I said ‘happier’ – that’s key to my message). These techniques are not killer expensive, but they do help build killer search apps. And pay attention here, especially you eCommerce guys: what you want is a killer search app; what you don’t want is a search app that kills all hope of getting sales.

Contrapuntal Variation I: Autophrasing – or when more than one word means just one thing

I’m not going to spend a lot of time on this one here because I have already written a pair of blogs on this subject that you might want to check out. Just put the term “autophrasing” into the web search engine of your choice and you should find them, but in case you don’t want to do that, here are the hyperlinks[1, 2] – there, I just increased my Google PageRank (thanks again Larry for thinking of this technique and naming it after yourself). As you may already know – like most of the world – I use the web search engine that those two guys at Stanford invented back when they were young, poor and more community minded (sorry Bill, I’d use Bing, but after working on FAST Search for SharePoint 2010 for a year or so, I try to stay away from your stuff as much as I can – still have nightmares – but kudos on C#, once nicknamed the “Java-Killer” – really sweet language, yes indeed – but as you must know by now, it didn’t – ask the other Larry – the C# thing is kinda like when your Mom told you about the Web, but don’t get me started on IE or the Windows Phone – as we say in New Jersey, ‘fuhgeddaboudit’!). If you want a good laugh, check out Brin & Page’s academic paper on how the web search engines of the day that they competed with, like AltaVista, were too “commercial” and theirs wasn’t – see “8 Appendix A: Advertising and Mixed Motives” – nice pics of the boys too – because it was a dot EDU hosted by Stanford (the internet actually originated in Academia, and believe me, schools like Stanford are commercial mega-beasts too, just like B of A).

(Another reason for Brin & Page’s initial success is that the existing search sites REALLY sucked. Both of them were graduate students at the time; now they are both godzillionaires – hmmm, I wonder if Larry and/or Sergey ever finished their PhDs? – might just increase their earning potential, don’t you think? – but hell, Zuckerberg didn’t even finish his bachelor’s degree – ah well, overeducated and underpaid, at least I got to wear that cool hood on my graduation gown. In any case, the REAL genius of the commercial version of their engine is that these guys figured out how to sell search terms for bazillions without mucking up the free search thing, which is what they were ranting about in their paper – the use of simple graphical techniques like background color is a killer when done right – and of course, screen location, location, location.)

Autophrasing is a good tool to limit false positives because it puts some semantic awareness into search; but like all things, it is just one tool in your arsenal, and you shouldn’t use it to hammer in all of your nails. It basically tells the Lucene search engine, “No, this phrase represents one thing, so don’t break it up (tokenize it)”. In some use cases, this can really cut through the noise like a hot knife through butter, but like synonyms, it requires some care and feeding – I love the term curation in this context – so it is not really ‘free’, because it requires some sweat equity.
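To make the “don’t break it up” idea concrete, here is a minimal pure-Python sketch of autophrasing as a greedy longest-match pass over an already-tokenized query. It is not the Lucene token filter itself; the phrase list, the `_` joiner and the function name `autophrase` are hypothetical stand-ins for a curated phrase file.

```python
# Hypothetical curated phrase list; in Lucene this would live in a phrase file.
PHRASES = {("new", "york", "city"), ("big", "apple"), ("sofa", "bed")}


def autophrase(tokens, phrases=PHRASES, joiner="_"):
    """Greedy longest match: collapse each known multi-word phrase into one token."""
    max_len = max((len(p) for p in phrases), default=1)
    out, i = [], 0
    while i < len(tokens):
        # Try the longest possible phrase starting at position i first.
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            candidate = tuple(tokens[i:i + n])
            if candidate in phrases:
                out.append(joiner.join(candidate))
                i += n
                break
        else:
            # No phrase starts here, so keep the single token as-is.
            out.append(tokens[i])
            i += 1
    return out


print(autophrase("new york city hotels".split()))  # ['new_york_city', 'hotels']
print(autophrase("new york".split()))              # ['new', 'york']
```

Note that “new york” on its own is left alone because only the three-word phrase was curated; that is the sweat equity part.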

Syntax vs. semantics – another point that I want to emphasize here is the use of autophrasing with synonyms, and why you should do that rather than trying to solve the multi-term problem with synonyms alone. This was covered in my second blog on autophrasing, but the gist of it is that using synonyms as a workaround for the multi-term problem has lots of edge cases because, in Lucene, the Synonym TokenFilter works at the level of syntax (token positions) rather than semantics. Autophrasing solves the problem at the semantic level by mapping phrases to entities or concepts, and that is why it works so well in many use cases. Synonym mapping downstream from autophrasing can then take advantage of the more natural one-to-one token mapping that Lucene analysis chains thrive on (and proper care and feeding of these “analysis chains” can make you some serious money – especially you eCommerce guys).
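Here is a small sketch of the ordering argument, assuming a hypothetical phrase list and a synonym map keyed only on single tokens. Because autophrasing runs first, every synonym rule is one token in, one token out, so token positions never shift. The names `PHRASES`, `SYNONYMS` and `analyze` are illustrative, not part of any Lucene or Solr API.

```python
# Hypothetical phrase list and single-token synonym map (canonical form on the right).
PHRASES = {("big", "apple"), ("new", "york", "city")}
SYNONYMS = {"big_apple": "new_york_city", "nyc": "new_york_city"}


def autophrase(tokens, phrases=PHRASES):
    """Greedy longest-match phrase collapsing (same idea as an autophrasing filter)."""
    max_len = max(len(p) for p in phrases)
    out, i = [], 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            if tuple(tokens[i:i + n]) in phrases:
                out.append("_".join(tokens[i:i + n]))
                i += n
                break
        else:
            out.append(tokens[i])
            i += 1
    return out


def analyze(text):
    """Lowercase, autophrase, then apply a strictly one-to-one synonym map.

    Returns (position, token) pairs so you can see that positions never shift.
    """
    tokens = autophrase(text.lower().split())
    return [(pos, SYNONYMS.get(tok, tok)) for pos, tok in enumerate(tokens)]


for query in ["Big Apple weather", "NYC weather", "New York City weather"]:
    print(analyze(query))  # [(0, 'new_york_city'), (1, 'weather')] each time
```

All three ways of naming the city end up as the same single token at position 0, which is exactly the one-to-one mapping the analysis chain is happiest with.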

Like another problem that we will look at in a moment, search has more difficulty “doing the right thing” when users add search terms: the user is trying to make the search more precise by doing so, and this often goes south because of bag-of-words (BOW) search. (I was thinking of coining BOW-WOW, which would stand for Bag of Words – What Owful Wesults – as if spoken by a spelling-challenged Elmer Fudd, but decided against it.) Autophrasing is a good way to cut through some of this chaff by tightening (as much as possible) the mapping between terms and things. But as I said, it can’t go all the way because not all multi-word problems fall into this category.

Variation II: The ‘red sofa’ problem

This one came up in a customer engagement, but on thinking about it for a while, it turns out to be a really good example of when increased linguistic precision actually causes decreased search precision for an out-of-the-box (OOTB, i.e. unassisted) search app. The linguistic thing here is about qualifiers or adjectives. The term ‘red’ in this case qualifies ‘sofa’ by restricting the set of things called ‘sofa’ to things that are both sofas and ‘red’ in color. In other words, there is an implied AND in this phrase (in the boolean sense). The search engine does not know this and will typically treat the query as a boolean OR by default, returning both red things and things that are sofas, only some of which are red sofas – i.e. lots of false positives. If I were a savvy search user who understood this, I could type ‘red AND sofa’ (some query parsers, like Solr’s standard and extended dismax (edismax) parsers, treat all-upper-case AND and OR as operators, which distinguishes booleans from vernacular usages that can then be removed as stop words). However, most users are not going to do this (trust me) because, as I said before, they don’t know what AND and OR really mean to us math freaks. It’s actually not their fault – as explained in my previous post, “and” and “or” are ambiguous in natural language – sometimes they conform to boolean logic, sometimes they don’t – language is fickle and that’s part of our problem.
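The false-positive problem is easy to see on a toy inverted index. This sketch (a hypothetical five-item catalog and a hypothetical `search` helper) contrasts the OR semantics a default query gives you with the AND the user actually meant.

```python
# A hypothetical five-item catalog and a toy inverted index: term -> doc ids.
DOCS = {
    1: "red leather sofa",
    2: "grey fabric sofa",
    3: "red kettle",
    4: "red wine glasses",
    5: "red fabric sofa",
}


def build_index(docs):
    index = {}
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index.setdefault(term, set()).add(doc_id)
    return index


INDEX = build_index(DOCS)


def search(query, operator="OR"):
    """Bag-of-words matching: OR unions the posting lists, AND intersects them."""
    postings = [INDEX.get(term, set()) for term in query.lower().split()]
    if not postings:
        return set()
    combine = set.union if operator == "OR" else set.intersection
    return combine(*postings)


print(sorted(search("red sofa")))         # [1, 2, 3, 4, 5]
print(sorted(search("red sofa", "AND")))  # [1, 5]
```

With OR, the kettle, the wine glasses and the grey sofa all come back for ‘red sofa’; with AND, only the two red sofas do.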

Understanding when this linguistic qualification process is happening in a phrase is an NLP problem, so if we were really smart, we could attack it that way. You could probably solve it with autophrasing, but you would have to cover all of the possible permutations – i.e. it could be made to work, but it’s not a great fit (have you ever tried to pound in a deck screw with a hammer?).
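A quick back-of-the-envelope count shows why the permutations hurt. Assuming tiny, made-up vocabularies of 5 colors, 3 materials and 4 product types, and counting both word orders for the three-word case, an autophrase list has to enumerate every combination, while a whitelist only has to name the qualifiers.

```python
from itertools import product

# Made-up vocabularies; a real catalog would have many more of each.
COLORS = ["red", "blue", "green", "black", "white"]
MATERIALS = ["leather", "fabric", "velvet"]
PRODUCTS = ["sofa", "chair", "ottoman", "loveseat"]


def autophrase_entries():
    """Every qualifier + product phrase an autophrase list would have to spell out."""
    entries = set()
    for color, item in product(COLORS, PRODUCTS):
        entries.add(f"{color} {item}")
    for material, item in product(MATERIALS, PRODUCTS):
        entries.add(f"{material} {item}")
    for color, material, item in product(COLORS, MATERIALS, PRODUCTS):
        entries.add(f"{color} {material} {item}")
        entries.add(f"{material} {color} {item}")  # users type both orders
    return entries


def whitelist_entries():
    """A whitelist only needs the qualifiers; the product noun stays in q."""
    return set(COLORS) | set(MATERIALS)


print(len(autophrase_entries()), len(whitelist_entries()))  # 152 8
```

That is 152 phrase entries versus 8 whitelist entries, and the gap grows multiplicatively with every new color, material or product type.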

A more elegant but still K-Mart-priced approach would be to recognize that red is a color, put together a list of common colors, and use that to detect their presence in a query (i.e. a poor man’s entity detection strategy known as whitelisting). We can then detect these terms in the token stream of the query, yank them out and create a filter query (if we are smart enough to use Lucene-Solr) so that q=red sofa becomes:

q=sofa
fq=color:red
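Here is a minimal sketch of that rewrite in Python. The whitelist, the `color` field name and the `rewrite` function are assumptions for illustration (your schema and vocabulary will differ). The output is a plain dict of Solr request parameters, where `fq` is a list because Solr accepts repeated filter queries and intersects their results.

```python
# Hypothetical color whitelist; in practice, build it from your catalog's color facet.
COLORS = {"red", "blue", "green", "black", "white", "grey"}


def rewrite(q, whitelist=COLORS, field="color"):
    """Move whitelisted terms out of the free-text query and into filter queries."""
    kept, filters = [], []
    for token in q.split():
        if token.lower() in whitelist:
            filters.append(f"{field}:{token.lower()}")
        else:
            kept.append(token)
    # If only a color was typed, match everything and let the filter do the work.
    return {"q": " ".join(kept) or "*:*", "fq": filters}


print(rewrite("red sofa"))  # {'q': 'sofa', 'fq': ['color:red']}
```

The `*:*` fallback keeps a bare ‘red’ query from turning into an empty `q`.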

Now, if we have pre-categorized our sofa records according to color and have the appropriate metadata in place, voila – the free text query ‘red sofa’ just brings back red sofas! Hallelujah! – It’s Miller Time! (Although I really don’t like Miller Lite – you know the one about why American Light beer is like making love in a canoe, don’t you? – Uncyclopedia credits it to Oscar Wilde, as it credits just about everything, but it’s really a Monty Python line – and I added the ‘light’ part because, thanks to Jimmy Carter and Sam Adams, it’s no longer true in general.) Getting back to the subject at hand, what we have basically done with this nifty keen query rewrite is to short-circuit the faceted search scenario where the user first searches for ‘sofa’ and then clicks on the ‘red’ color facet, but it appears to be magic (ask James Randi – magic is all about illusion). People just like typing into the search box and, thanks again to the Wizards of Mountain View, they want it to come back wicked fast and do the right thing.

(Update for those of you that like to play with code (fire?): here is a GitHub link to a simple implementation of this idea. It doesn’t support things like multi-word category terms (need to do some ‘shingling’ for this or something – roofing, plumbing – i.e. pipeline construction – these are core trade skills here). Enjoy.)

Going back to our main theme (the Fugue motive or subject, as Bach would say), we are again injecting a little bit of semantic awareness into the query parsing process – the solution acts as if the computer knows what the word ‘red’ means! Note also that there are many other ‘red’ things out there that are not sofas; this solution doesn’t need to care about that, whereas using autophrasing to solve this problem obviously would (i.e. LOTS of permutations when you get to the real world). What is also interesting is that we can chain these in a query parser pipeline so that we can add other semantic filters like this. For example, another use case for this client was ‘red leather sofa’, so we can pick off color and then material and filter query them both – wow, that’s some smart ‘puter programmin’! This is also where entity extraction and tagging become interchangeable. If I have a regex extractor that knows how to find dates, for example, I can wrap it in this fashion to create a tagger (the creation of fq=color:red is a kind of tagging operation).
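The chaining idea can be sketched as a list of stages, each taking and returning the same query dict. This is an illustration only: the `color`, `material` and `listed_date` field names, the ISO-date regex and the stage factories are hypothetical, and this is not the Fusion Query Pipeline API. The date stage shows how a plain regex extractor becomes a tagger once its matches are turned into filter queries.

```python
import re

# Hypothetical vocabularies and field names; adapt them to your own schema.
COLORS = {"red", "blue", "black", "white"}
MATERIALS = {"leather", "fabric", "velvet"}
# Deliberately naive: matches YYYY-MM-DD shapes without validating month or day.
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def whitelist_stage(field, vocabulary):
    """Stage that moves whitelisted terms from q into fq clauses on `field`."""
    def stage(query):
        kept = []
        for token in query["q"].split():
            if token.lower() in vocabulary:
                query["fq"].append(f"{field}:{token.lower()}")
            else:
                kept.append(token)
        query["q"] = " ".join(kept)
        return query
    return stage


def date_stage(field):
    """Wrap a regex extractor as a tagger: each ISO date becomes a one-day range filter."""
    def stage(query):
        for day in ISO_DATE.findall(query["q"]):
            query["fq"].append(f"{field}:[{day}T00:00:00Z TO {day}T23:59:59Z]")
        query["q"] = " ".join(ISO_DATE.sub(" ", query["q"]).split())
        return query
    return stage


PIPELINE = [
    whitelist_stage("color", COLORS),
    whitelist_stage("material", MATERIALS),
    date_stage("listed_date"),
]


def run(q, pipeline=PIPELINE):
    """Push the query through every stage in order, then fall back to *:* if q is empty."""
    query = {"q": q, "fq": []}
    for stage in pipeline:
        query = stage(query)
    if not query["q"]:
        query["q"] = "*:*"
    return query


print(run("red leather sofa"))  # {'q': 'sofa', 'fq': ['color:red', 'material:leather']}
```

Adding another semantic filter is just one more entry in `PIPELINE`; the stages never need to know about each other.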

But let’s not get carried away: we have to match these tricks to use cases or we are doomed. That is why they are especially good for eCommerce, because the use cases are fairly obvious and the vocabulary is constrained to our product set and the verbiage that we use when talking about it. It would be cool if I could type in ‘watches priced between 10 and 50 dollars’, but wait – maybe with a little NLP sauce here … but as Steve Martin would say on his SNL gigs, “Naaaahhhh!!!” (A brief infomercial here – click the ‘skip ad’ button if you want to get back to the Video. Our awesome new Lucidworks Fusion product adds the idea of Query Pipelines and this is where you can do lots of cool shit like this.)

Point: Machine-Learning Lite

Another example of this basic technique of query introspection is to use machine learning techniques to infer the subject or topic of the query and then boost similar results based on this categorization, rather than relying on the exact words used in the query or even their synonyms. This is the recipe that I referred to in my previous post from Ingersoll et al.’s masterpiece of non-fiction tech-writing “Taming Text” (if you remember from last time, Grant Ingersoll is my boss so I have to say nice things about him – not that I wouldn’t though, he’s a really nice guy and great to work for too). It is discussed in their chapter on building question and answer sites, but it could also be used as a boost to baseline TF/IDF ranking algorithms. In brief, we train up a machine learning ‘model’ by giving it pre-categorized chunks of text. This is why it is called supervised (or semi-automated) machine learning: we need to provide the seed crystals of knowledge and then watch it grow. A model in this sense is a real black-boxy thing that stores pattern knowledge in some mathematical matrix soup that we can then regurgitate to match new chunks of text that it hasn’t seen before (these things usually take three Math PhDs to explain, so if you don’t get it the first time, drink a few beers or a Scotch or two and try again in the morning – or pain killers if that’s needed, but not all in one sitting – please!).
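To show what “training up a model” on pre-categorized text looks like at the smallest possible scale, here is a multinomial naive Bayes classifier with add-one smoothing, written from scratch. It is a stand-in chosen for brevity, not necessarily the classifier Taming Text uses, and the training snippets and category labels are made up.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Tiny multinomial naive Bayes with add-one smoothing (illustrative only)."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.doc_counts = Counter()              # label -> number of training docs
        self.vocab = set()

    def train(self, text, label):
        """Add one pre-categorized chunk of text (the SME's 'seed crystal')."""
        words = text.lower().split()
        self.word_counts[label].update(words)
        self.doc_counts[label] += 1
        self.vocab.update(words)

    def scores(self, text):
        """Log-probability score per label for a chunk of text the model hasn't seen."""
        total_docs = sum(self.doc_counts.values())
        result = {}
        for label, counts in self.word_counts.items():
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(self.doc_counts[label] / total_docs)
            for word in text.lower().split():
                score += math.log((counts[word] + 1) / denom)
            result[label] = score
        return result

    def classify(self, text):
        s = self.scores(text)
        return max(s, key=s.get)


model = NaiveBayes()
for text, label in [
    ("leather sofa with recliner", "furniture"),
    ("oak dining table and chairs", "furniture"),
    ("stainless steel kettle", "kitchen"),
    ("nonstick frying pan", "kitchen"),
]:
    model.train(text, label)

print(model.classify("comfy sofa"))  # furniture
print(model.classify("steel pan"))   # kitchen
```

“comfy” never appeared in training, yet the query still lands in the right category because of “sofa”; that is the matching-on-unseen-text part.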

If we use the classifier’s output as another “vote” in our overall relevance recipe by boosting, we can hopefully redress some of the pitfalls of the BOW-based TF/IDF approach, which is a purely “automated” solution – it doesn’t get any help from Subject Matter Experts. That is, if we apply this at query time, we can boost documents that are similar, and this similarity is based on the “seeds” planted by the SMEs that tagged the original training set (“Avast Mr. Smee – where’s that dratted Pan?”). So with a little effort up front, the value of knowledge built into the training set can be multiplied. If you do it right, you can get a good return on investment, but this is a case where one of our favorite consultant phrases, “your own mileage may vary”, clearly applies. (The other is “It Depends” – I wanted to trademark that one so I could collect royalties, but someone has already beaten me to it – OK, I’ll pay up.)
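One way to cast that vote in Solr is a boost query (`bq`), which the dismax and edismax parsers support: documents in the predicted category get extra score, but nothing is filtered out. In this sketch the `category` field, the base weight, the confidence threshold and the choice to scale the boost by the classifier’s confidence are all assumptions to tune, not recommendations.

```python
def boost_params(q, label, confidence, field="category", weight=5.0, min_confidence=0.6):
    """Turn a classifier prediction into an edismax boost query (a vote, not a filter).

    `confidence` is assumed to be a probability-like score in [0, 1] from your classifier.
    """
    params = {"defType": "edismax", "q": q}
    if confidence >= min_confidence:
        # bq adds an optional clause: docs in the category score higher,
        # but docs outside it can still match and rank on TF/IDF alone.
        params["bq"] = f'{field}:"{label}"^{weight * confidence:.2f}'
    return params


print(boost_params("comfy sofa", "furniture", 0.8))
# {'defType': 'edismax', 'q': 'comfy sofa', 'bq': 'category:"furniture"^4.00'}
print(boost_params("comfy sofa", "furniture", 0.3))
# {'defType': 'edismax', 'q': 'comfy sofa'}
```

Dropping the boost entirely when the classifier is unsure is one simple way to keep a bad guess from drowning out the baseline ranking.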

Melodic Inversion: Inferential search

Which brings me to the topic of what I call “Inferential Search” – the process by which the software infers “what” you want to find. Best Bets or landing pages are a brute-force example of this. Another example, and one of the wicked awesomest things that the Brin & Page Minions did, is the weather forecast popup for the Big Apple that appears when I put “New York City Weather forecast” into their engine – (no, I don’t think that they look like the little guys in Despicable Me, but I didn’t want to go the Lord of the Rings route either – that’s really not cricket, as the Brits would say – because as you may recall, I don’t want them to think too poorly of me – they do great stuff, but have too much of the world’s money, and like all of us, I’d like to get a bigger piece of that action somehow).

Which reminds me of another example of “owful BOW wesults”. When you crawl our company website – lucidworksde.wpenginepowered.com – and search for “apple”, you hit on one of my posts because it discusses fixing multi-word synonyms for NYC like “Big Apple” – oops, now this one will hit too… sorry Cupertino, you and I will just have to co-exist in that collection.

Machine learning techniques can also help drive landing pages if the output of ML-driven query introspection feeds a landing page or best bets lookup table. This way, you don’t have to put all of the possible ways to ask for a weather report in your spreadsheet; you roll up a trainer, put in a bunch of candidate phrases and test and tweak, test and tweak, test and tweak (i.e. keep going until QA stops laughing). What you end up with is fuzzy-matching-driven best bets – another truly magical thing that is explained quite nicely in Taming Text.
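A toy version of fuzzy best bets: instead of listing every phrasing in a spreadsheet, keep a few example phrases per intent and route a query to the landing page of its nearest intent. For brevity this sketch uses token-overlap (Jaccard) similarity as a stand-in for a trained model; the intents, phrases, URLs and the 0.3 threshold are hypothetical.

```python
# Hypothetical intents: a few example phrasings each, plus a best-bets lookup table.
TRAINING = {
    "weather": [
        "new york city weather forecast",
        "weather in nyc",
        "is it going to rain in new york",
    ],
    "store_hours": ["what time do you open", "store hours today", "when do you close"],
}
BEST_BETS = {"weather": "/landing/weather", "store_hours": "/landing/hours"}


def jaccard(a, b):
    """Token-overlap similarity between two strings, from 0.0 to 1.0."""
    x, y = set(a.lower().split()), set(b.lower().split())
    return len(x & y) / len(x | y) if x | y else 0.0


def best_bet(query, threshold=0.3):
    """Return the landing page of the nearest intent, or None if nothing is close enough."""
    best_intent, best_score = None, 0.0
    for intent, phrases in TRAINING.items():
        for phrase in phrases:
            score = jaccard(query, phrase)
            if score > best_score:
                best_intent, best_score = intent, score
    if best_intent is None or best_score < threshold:
        return None
    return BEST_BETS[best_intent]


print(best_bet("weather forecast for new york"))  # /landing/weather
print(best_bet("red sofa"))                       # None
```

The test-and-tweak loop then amounts to adding phrases to `TRAINING` (or swapping in a real classifier) and adjusting the threshold until QA stops laughing.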

Counterpoint: Designing Taxonomies to solve search problems

Here we touch on a real pet peeve of mine (I have been restraining myself so far in writing these blogs, can’t you tell?). Many (most?) taxonomies are designed in such an ad hoc way that they have absolutely no hope of solving any search problem, and this is one reason that they get a bad rap, especially from the Machine-Learning Uber Alles crowd. As I have said before, every tool has a use – you don’t use a chain saw when a scalpel is called for, and you didn’t see me bashing ML, did you? Taxonomies CAN solve difficult and interesting search problems, especially if they are designed to do that from the get-go. Too often, they are created by people that understand language and vocabulary very well, but don’t understand search – yeah, like most of us (which gives me job security, I hope). You have heard of “Garbage In, Garbage Out”? In a future blog post, I want to tackle this controversy head on by putting in my own 2 cents’ worth on this seemingly either/or debate – look, it’s both a floor wax AND a dessert topping. So with this rant out of the way, let’s proceed (sorry, but I hope that you don’t mind – I’ve been holding in these things for a while now … it’s like watching commercial TV – 5 minutes of program, 8 minutes of commercial – bada bing, bada boom).

As I said earlier, taxonomies and ontologies are ways of encoding knowledge. We just need a good “codec” for this – i.e. a way to encode knowledge and then use that to efficiently decode text. Imagine that you have a Company ontology that knows things like how big the company is, what vertical it is in, where it is headquartered, and so on (I’m sure that Reuters, Bloomberg or Taxonomy Warehouse has one of these). If you then see an article that mentions General Motors, Ford, and Chrysler you would think (and the ontology can help the search engine to think this too) that the article is about “U.S. automobile manufacturers”. If I throw in Toyota or BMW, then it shifts to “automobile manufacturers” period. However, if I added General Electric and Boeing, we are talking about “manufacturers” in general but if I then add Bank of America and Hyatt, we are now talking collectively about “Fortune 100 companies”.

These categories – manufacturers, auto manufacturers, Fortune 100 companies – can be structured as ontology “category” nodes, and their instances (GM, Ford, GE, BofA) can be put under them as “evidence” nodes, so that when the votes are cast, the category with the most votes that is also closest to the voters wins. By closest to voters I mean that all of these scenarios could hit on the top category – Fortune 100 – but if we had the first list, since “U.S. auto manufacturers” is more specific and no other child category is competing, we can go with that. So yes, there needs to be a little algorithmic wizardry applied here – like using the shortest knowledge graph path as a tie-breaker – but it’s not rocket science (see below)! And if this irrefutable logic doesn’t convince you, check out these great articles by Wendi Pohs and my good friend Stephanie Lemieux.
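The voting scheme can be sketched in a few lines. Every mentioned company votes for each category above it, the categories with the most votes survive, and ties are broken by the total path length back to the voters, so the most specific covering category wins. The toy taxonomy mirrors the example above and is hypothetical, not a real ontology; unknown mentions simply cast no votes, and the function returns None when nothing matches.

```python
from collections import defaultdict

# Hypothetical toy taxonomy (child -> parent) mirroring the example in the text.
# Category nodes and evidence (company) nodes share a single parent map.
PARENT = {
    "Manufacturers": "Fortune 100 companies",
    "Automobile manufacturers": "Manufacturers",
    "U.S. automobile manufacturers": "Automobile manufacturers",
    "General Motors": "U.S. automobile manufacturers",
    "Ford": "U.S. automobile manufacturers",
    "Chrysler": "U.S. automobile manufacturers",
    "Toyota": "Automobile manufacturers",
    "BMW": "Automobile manufacturers",
    "General Electric": "Manufacturers",
    "Boeing": "Manufacturers",
    "Bank of America": "Fortune 100 companies",
    "Hyatt": "Fortune 100 companies",
}


def ancestors(node):
    """Yield (category, path length) for every category above a node."""
    distance = 0
    while node in PARENT:
        node = PARENT[node]
        distance += 1
        yield node, distance


def infer_category(mentions):
    """Most votes wins; ties go to the category with the shortest total path to its voters."""
    votes = defaultdict(int)
    total_distance = defaultdict(int)
    for mention in mentions:
        for category, distance in ancestors(mention):
            votes[category] += 1
            total_distance[category] += distance
    if not votes:
        return None  # no mention was found in the taxonomy
    return min(votes, key=lambda c: (-votes[c], total_distance[c]))


us_autos = ["General Motors", "Ford", "Chrysler"]
print(infer_category(us_autos))                # U.S. automobile manufacturers
print(infer_category(us_autos + ["Toyota"]))   # Automobile manufacturers
print(infer_category(us_autos + ["Toyota", "General Electric", "Boeing"]))  # Manufacturers
print(infer_category(us_autos + ["Toyota", "General Electric", "Boeing",
                                 "Bank of America", "Hyatt"]))  # Fortune 100 companies
```

Run on the four lists from the text, it moves from U.S. automobile manufacturers to automobile manufacturers, then manufacturers, then Fortune 100 companies, as described.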

Counterexposition: Science vs(?) Engineering

Computer science is in fact known to be even more complex than rocket science, thank you very much! (As Bill Murray says in Ghostbusters – “Back off man, I’m a Scientist!”) Who’s richer now, the pencil-neck geeks who didn’t go to the prom like Bill Gates, the Larrys or young Master Zuckerberg (don’t know about the prom part guys, but if I missed that one, sorry, don’t kill me) or … literally in Bill’s case, everybody else? Precisely my point! But wait a sec, what does that have to do with Science? Uh, maybe it doesn’t, but what the hell, let’s move on.

And the ‘S’ word (no, not that one!) brings me to another point. Face it guys, what we do is not really computer “science”, it’s computer “engineering”, and that is not meant as a slight (really it isn’t) – my Dad was an engineer and it’s an extremely important and honorable profession (rocket “science” never was science, it was always just “engineering”). The guys that really do “computer science” tend to work in Academia or as Watson Fellows (sigh); their code needs major bullet-proofing, but the ideas are really freakin’ cool – I mean bleeding-edge stuff, things that Spielberg featured in that killer flick Minority Report, for example.

But ahhh, to live the life of an unlimited string of POCs with NSF funding up the wazoo (wait, right, that used to be a gravy train, but not since the Republicans got ahold of the Congressional Budget Committee and “Science” became a dirty word in our political lexicon). So make sure that any whiz kid who comes out of a Masters or PhD program in Comp Sci knows how to write a good unit test and knows the distinction between the DEV, QA and PROD servers. I was looking at some demos at an EE Dept. of a major University that I was collaborating with (the names have been changed to protect the innocent here), and the grad students were doing some pretty cool stuff, but the stack traces on the monitor were spewing out so fast my head was spinning. The demos had nothing to do with what I am talking about here, but as discussed below, the title of this blog is about mixing Art and Science, so again, to paraphrase my favorite Ghostbuster, Peter Venkman – back off, it’s my blog post.

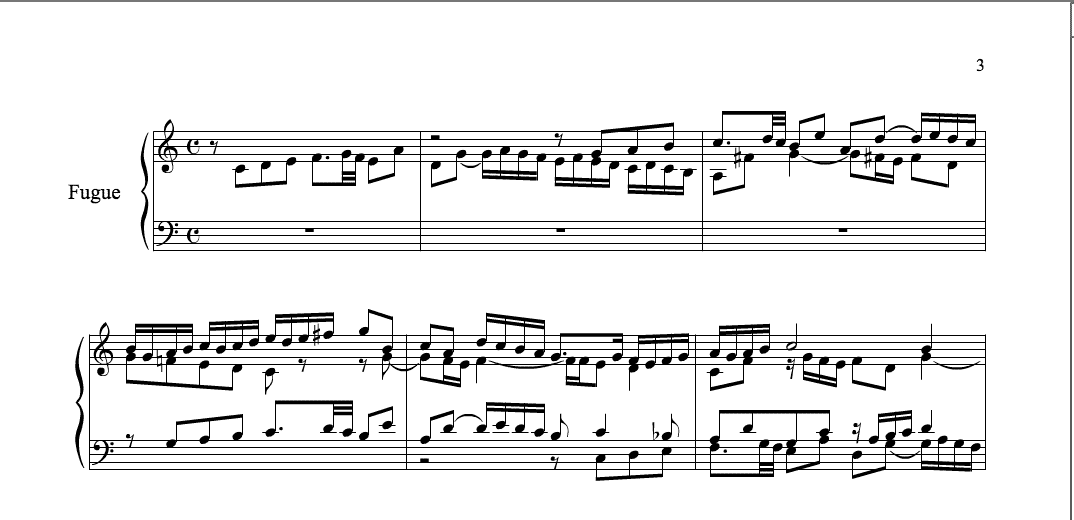

The Well Tempered Search Application – Da Capo al Coda

As I said, the title of this blog attempts to put a creative twist on the old saw about blending “Art and Science” – so for those of you troglodytes that didn’t get the reference by now, “Well Tempered” comes from Johann Sebastian Bach’s towering masterpiece “The Well Tempered Clavier”, which consists of two books of Preludes and Fugues in all 24 major and minor keys, 48 in all (what we cognoscenti refer to as “The 48”) – the graphics for these posts (eh OK, rants) are the opening bars of the Prelude and Fugue No. 1 in C Major from Book I (BWV 846). There have been some covers of the Prelude by modern-day recording artists like indie-alt-rocker M. Ward – so you may have heard this on Pandora, but if you are a Classical snob like me, maybe this link will do the trick. (Glenn Gould, love ’em or hate ’em, was one of a kind – the link is to the entire work – all 48 on YouTube – so you can listen to this while you work for the next 3 and a half hours or so – if you can stand Gould’s humming and the annoying YouTube ads, which are difficult to filter out on headphones and tend to break into the music off-key and at random, respectively – but what the hell, educate your co-workers too by putting this on speaker. Awesome! My contribution to cultural awareness – Bach certainly needs to have a better Google PageRank.) Another masterpiece that I could have ripped off from ole JSB for this installment would be “The Art of the Fugue”. This is a good jumping off place because a lot of Bach’s creative genius was in turning musical patterns like chords into unrelenting streams of sheer majestic beauty, but don’t get me started on ripping Classical CDs into iTunes! Suffice it to say that “Song” does not work too well in this genre, Steve; we need a better data model for Symphonies, Cantatas, Operas, Chamber works, Sonatas, etc. As you might have guessed, I have a much longer diatribe that maybe I’ll post somewhere (an outtakes DVD?) … you’re welcome – it’s getting late.

So getting back to the program, as you can see, there are a lot of powerful techniques that can be used to craft killer search applications without the resources of you-know-who – but that said, hey IT guys – Buy More Memory for chrissake! Thanks to Moore’s Law it’s pretty cheap now, so don’t be such a tight-ass – that 640KB limit that seems to be stuck in your head is ancient history – we use ‘TB’ now – waaaay more space for us software guys to screw up! The Science is in how these algorithms work (like Mike McCandless and Robert Muir’s awesome work on Lucene Finite State Automata or Hossman’s wicked cool cursorMark deep-paging algorithm) – the Art is in knowing when and where to apply them. You just need an array of tools and some craftsmanship to get the job done.

To conclude my blogcast, no search application is ever finished and there is no such thing as an average search application. Each one has its own unique lexical context, business rules and use cases – so the Search Appliance / Toaster model just doesn’t really fly too far (as I said in my last post, many may be flying towards the recycling bin as we speak). Building a Well Tempered Search Application means a focus on solving user experience problems with creative application of bitchin’ software routines, appropriate tool selection and intelligent data structures like taxonomies and other useful metadata.
